Data Systems Group

Our research aims to significantly enhance the efficiency of data-intensive systems and democratize data science, making it accessible to a broader audience. We aspire to achieve this by creating a new generation of systems that empower users to unlock the full potential of their data.

Our Team

Assoc. Prof. Radwa El Shawi

Prof. Dr. Sherif Sakr

Miika Hannula

Stefania Tomasiello

Riccardo Tommasini

Kristo Raun

Abdesselam Ferdi

Jan Timko

Mohamed Maher

Mehak Mushtaq Malik

Erick Fiestas

Ali Maharramov

Vikash Maheshwari

Ahmed Wael

Andres Caceres

Kelem Negesi

Karl-Hendrik Veidenberg

Alumni

Prof. Dr. Ahmed Awad

Simon Pierre Dembele

Muhammad Uzair

Hassan Eldeeb

PhD Graduate (2024)

Ali Ghazal

Master's Graduate (2025)

Mohamed Ragab

PhD Graduate (2023)

Fjodor Ševtšenko

Victor Aluko

Nshan Potikyan

Khatia Kilanava

Viacheslav Komisarenko

Simona Micevska

Elkhan Shahverdi

Abdelrhman Eldallal

Youssef Sherif

Hazem Mamdouh

Abdul Wahab

Abdul Hudson Taylor Lekunze

Shota Amashukeli

Sadig Eyvazov

Hasan Tanvir

Kristjan Lõhmus

Fred Boldin

Projects

Completed

ConnectFarms promotes sustainable, integrated crop and livestock farming by combining precision agriculture, circular economy practices, and nature-based solutions. Working across seven countries, the project develops tools and strategies to enhance soil health, animal welfare, and ecosystem services — all in line with the EU’s Farm to Fork strategy.

Simplifies machine learning for healthcare by automating model building and enhancing interpretability. Helps medical professionals create high-quality models and understand their predictions, supporting better decisions and improved patient outcomes.

Advances data management and large-scale machine learning with contributions to RDF benchmarking, online learning, and automated feature engineering. Developed open-source tools like BigFeat and enhanced platforms such as Apache Flink and Spark. Results include top-tier publications, new stream mining solutions, and integration of cutting-edge research into graduate teaching.

Ongoing

An open-source platform to streamline and democratize data science through automation, privacy-preserving federated learning, self-tuning pipelines, and explainable AI—tailored for diverse industry needs.

MachineLearnAthons—an inclusive ML challenge format designed to build data literacy, practical ML skills, and interdisciplinary teamwork among students with diverse backgrounds and experience levels.

Demos

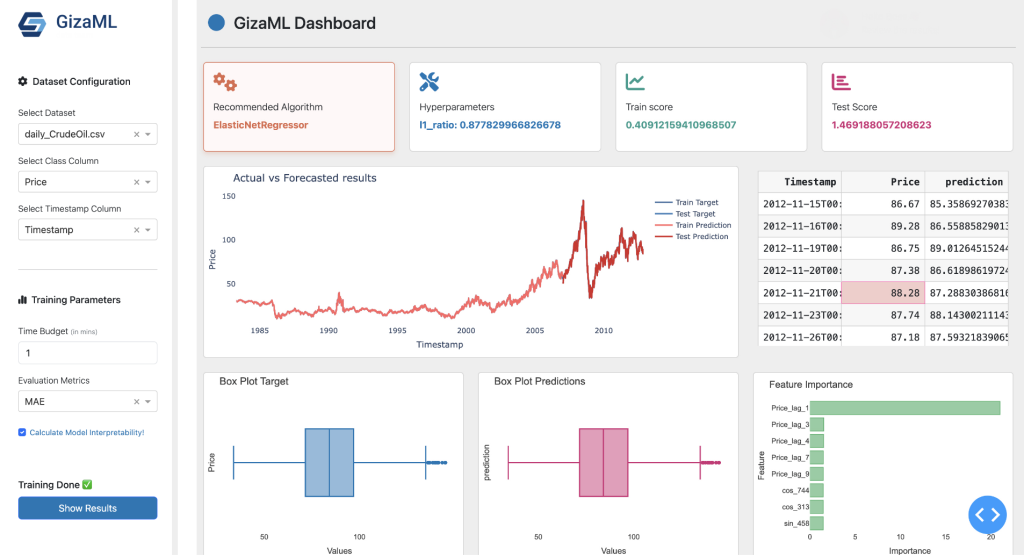

GizaML is a meta-learning-based framework designed to automate time-series forecasting by selecting the best machine learning models and tuning their hyperparameters. It operates in two main stages: first, it preprocesses the data; then, it uses a large language model to embed dataset characteristics and guide a meta-model in recommending effective ML pipeline configurations. GizaML supports nine regression algorithms and continuously learns from new runs to improve future recommendations.
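GizaML's preprocessing stage is not described in detail here. As a hedged illustration only, one common preprocessing step when applying regression algorithms to forecasting is to reframe the series as a supervised learning problem built from lagged values. The sketch assumes this approach; `make_lag_features` and `n_lags` are hypothetical names, not GizaML's API.

```python
from typing import List, Tuple


def make_lag_features(series: List[float], n_lags: int) -> Tuple[List[List[float]], List[float]]:
    """Turn a univariate series into (X, y) pairs for a regression model.

    Each row of X holds the `n_lags` previous values; y is the value that follows them.
    """
    if n_lags < 1:
        raise ValueError("n_lags must be at least 1")
    X: List[List[float]] = []
    y: List[float] = []
    for t in range(n_lags, len(series)):
        X.append(series[t - n_lags:t])  # window of past observations
        y.append(series[t])             # next value to forecast
    return X, y


X, y = make_lag_features([10.0, 12.0, 13.0, 15.0, 16.0], n_lags=2)
print(X)  # [[10.0, 12.0], [12.0, 13.0], [13.0, 15.0]]
print(y)  # [13.0, 15.0, 16.0]
```

Any of the supported regression algorithms could then be trained on `(X, y)`; the number of lags is itself a hyperparameter a meta-model might recommend.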

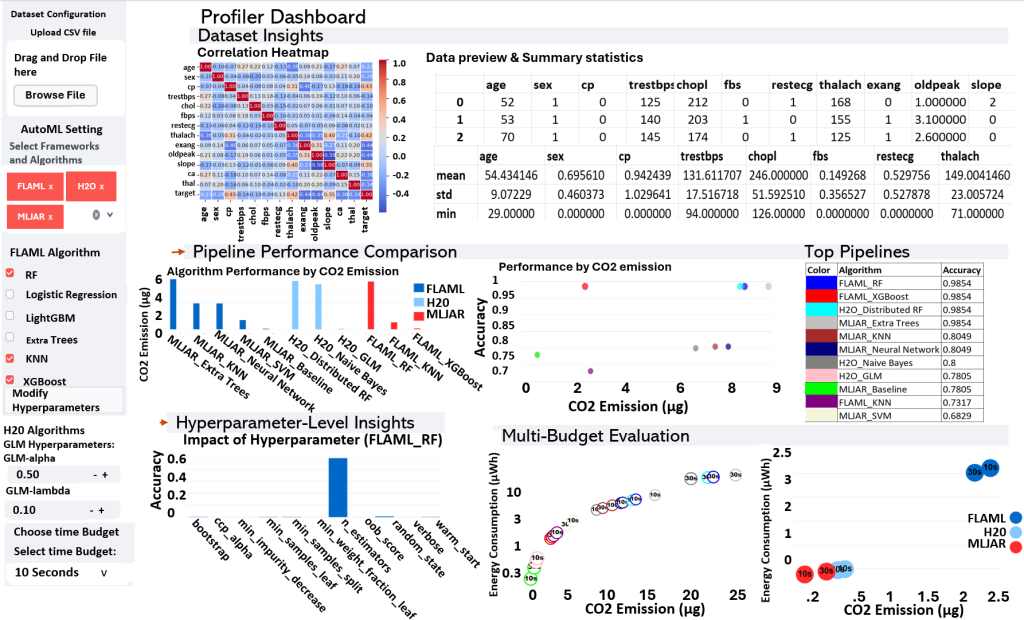

SustainaML is a lightweight visualization interface built atop FLAML, H2O, and MLJAR that enables interactive refinement of AutoML search spaces and evaluation of models based on both performance and sustainability metrics. SustainaML offers flexible configurations and actionable visual feedback.
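How SustainaML combines the two kinds of metrics is not specified here. One standard way to evaluate runs on performance and sustainability at the same time is to keep only the Pareto-optimal ones: runs that no other run beats on both accuracy and energy. The sketch below assumes that approach; `RunResult`, its fields, and the numbers are made up for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class RunResult:
    name: str
    accuracy: float    # higher is better
    energy_kwh: float  # lower is better


def dominates(a: RunResult, b: RunResult) -> bool:
    """True if `a` is at least as good as `b` on both metrics and strictly better on one."""
    no_worse = a.accuracy >= b.accuracy and a.energy_kwh <= b.energy_kwh
    strictly_better = a.accuracy > b.accuracy or a.energy_kwh < b.energy_kwh
    return no_worse and strictly_better


def pareto_front(results: List[RunResult]) -> List[RunResult]:
    """Keep the runs that no other run dominates (a run never dominates itself)."""
    return [r for r in results if not any(dominates(other, r) for other in results)]


runs = [
    RunResult("A", accuracy=0.91, energy_kwh=0.40),
    RunResult("B", accuracy=0.89, energy_kwh=0.10),
    RunResult("C", accuracy=0.88, energy_kwh=0.25),  # beaten by B on both axes
    RunResult("D", accuracy=0.93, energy_kwh=0.90),
]
print([r.name for r in pareto_front(runs)])  # ['A', 'B', 'D']
```

The front leaves the final choice to the user, which matches the idea of interactive refinement: a visual interface can plot these points and let the user pick the preferred trade-off.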

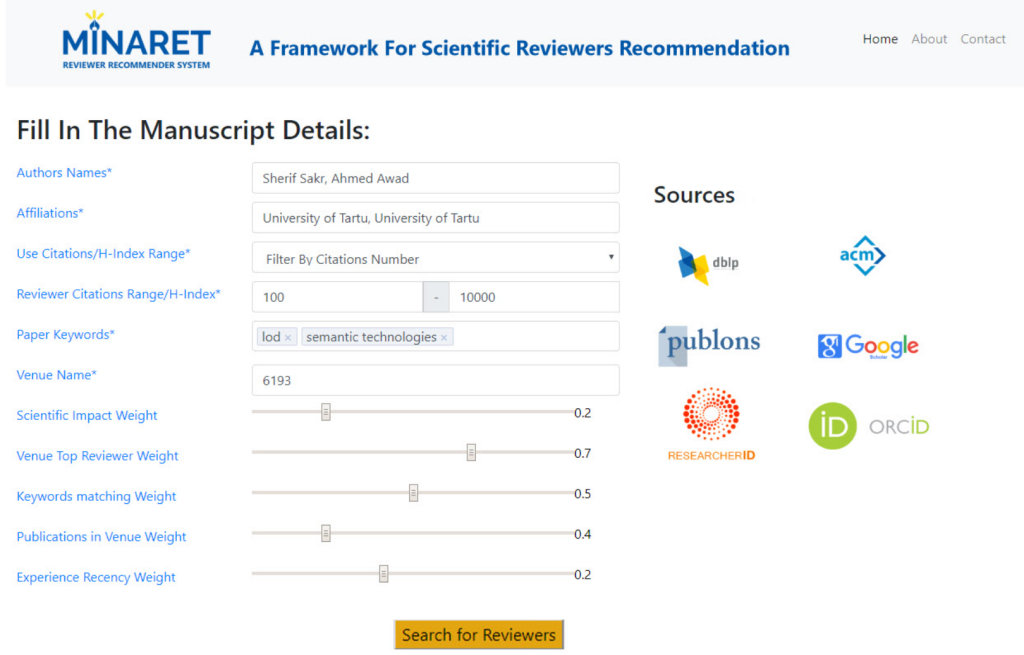

MINARET is a recommendation framework that helps journal editors streamline the reviewer selection process for scientific manuscripts. It identifies suitable reviewers by extracting up-to-date information from scholarly websites and filtering them based on topic relevance, potential conflicts of interest, and user-defined metrics. MINARET aims to make peer review more efficient and reliable by automating reviewer discovery and ranking using dynamic online data.
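MINARET's actual scraping and ranking pipeline is not specified at this level. As a hedged sketch of the filter-then-rank idea only, candidates with a conflict of interest or low topic overlap are removed, and the rest are ranked by a weighted sum of topic relevance and user-defined metrics. Jaccard keyword overlap, the conflict rules, and all names (`Candidate`, `rank_reviewers`, the `activity` metric, the sample reviewers) are illustrative assumptions.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class Candidate:
    name: str
    keywords: Set[str]      # research topics, e.g. collected from scholarly profiles
    affiliations: Set[str]
    coauthors: Set[str]
    metrics: Dict[str, float] = field(default_factory=dict)  # user-defined, hypothetical


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Topic overlap in [0, 1]: shared keywords divided by all keywords."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def has_conflict(c: Candidate, authors: Set[str], author_affiliations: Set[str]) -> bool:
    """Being an author, co-authoring with an author, or sharing an affiliation counts as a conflict."""
    return c.name in authors or bool(c.coauthors & authors) or bool(c.affiliations & author_affiliations)


def rank_reviewers(
    candidates: List[Candidate],
    manuscript_keywords: Set[str],
    authors: Set[str],
    author_affiliations: Set[str],
    weights: Dict[str, float],
    min_relevance: float = 0.1,
) -> List[str]:
    scored = []
    for c in candidates:
        if has_conflict(c, authors, author_affiliations):
            continue  # drop conflicts of interest outright
        relevance = jaccard(c.keywords, manuscript_keywords)
        if relevance < min_relevance:
            continue  # drop off-topic candidates
        score = weights.get("relevance", 1.0) * relevance
        score += sum(w * c.metrics.get(m, 0.0) for m, w in weights.items() if m != "relevance")
        scored.append((score, c.name))
    scored.sort(key=lambda pair: (-pair[0], pair[1]))  # best score first, ties broken by name
    return [name for _, name in scored]


candidates = [
    Candidate("R1", {"automl", "meta-learning"}, {"Uni X"}, set(), {"activity": 0.8}),
    Candidate("R2", {"automl", "streams"}, {"Uni Y"}, {"Author A"}, {"activity": 0.9}),
    Candidate("R3", {"databases"}, {"Uni Z"}, set(), {"activity": 1.0}),
    Candidate("R4", {"automl", "meta-learning", "xai"}, {"Uni W"}, set(), {"activity": 0.2}),
]
print(rank_reviewers(candidates, {"automl", "meta-learning"}, {"Author A"}, {"Uni T"},
                     weights={"relevance": 1.0, "activity": 0.5}))
# ['R1', 'R4']
```

R2 is removed as a co-author of the manuscript's author and R3 as off-topic; the remaining two are ordered by their combined score.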

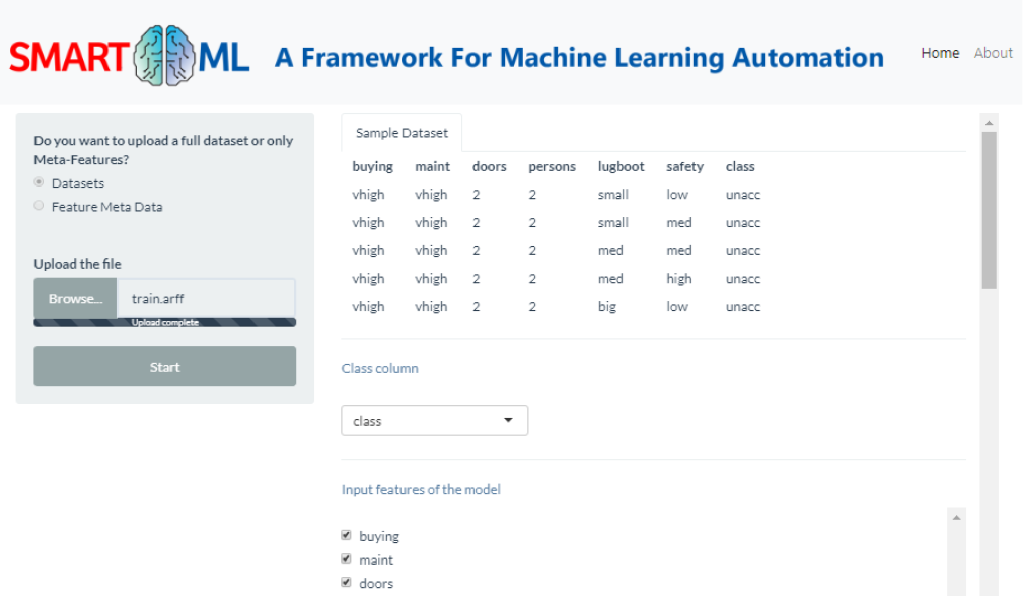

SmartML is a meta-learning-based framework designed to automate the selection and hyperparameter tuning of machine learning models. It mimics the role of a data science expert by maintaining a growing knowledge base of dataset characteristics and model performance. When given a new dataset, SmartML extracts its meta-features, retrieves the best model configuration from its knowledge base, and begins optimization. The system learns from every run, enhancing future recommendations and outperforming existing AutoML solutions.
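The retrieve-then-optimize loop described above can be sketched as a nearest-neighbour lookup over meta-features. This is an illustrative assumption, not SmartML's code: the meta-features chosen, the Euclidean similarity, and the names `meta_features` and `KnowledgeBase` are hypothetical, the scores are made up, and the subsequent hyperparameter optimization step is omitted.

```python
import math
import statistics
from typing import Dict, List, Optional, Tuple


def meta_features(X: List[List[float]], y: List[int]) -> List[float]:
    """A handful of simple dataset characteristics (a hypothetical, tiny subset)."""
    n_rows, n_cols = len(X), len(X[0])
    counts = [y.count(label) for label in set(y)]
    class_entropy = -sum((k / n_rows) * math.log2(k / n_rows) for k in counts)  # label balance
    mean_feature_std = statistics.mean(statistics.pstdev(col) for col in zip(*X))
    return [math.log(n_rows), math.log(n_cols), float(len(counts)), class_entropy, mean_feature_std]


class KnowledgeBase:
    """Stores (meta-features, configuration, score) triples from past runs."""

    def __init__(self) -> None:
        self.entries: List[Tuple[List[float], Dict[str, object], float]] = []

    def add(self, features: List[float], config: Dict[str, object], score: float) -> None:
        self.entries.append((features, config, score))

    def recommend(self, features: List[float]) -> Optional[Dict[str, object]]:
        """Return the best-scoring configuration of the most similar past dataset."""
        if not self.entries:
            return None
        # Nearest neighbour in meta-feature space; among equally near entries, prefer
        # the higher score. A real system would normalise the features first.
        _, config, _ = min(self.entries, key=lambda e: (math.dist(e[0], features), -e[2]))
        return config


kb = KnowledgeBase()
seen = meta_features([[0.0, 1.0], [2.0, 1.0], [4.0, 1.0], [6.0, 1.0]], [0, 1, 0, 1])
kb.add(seen, {"model": "random_forest", "n_estimators": 100}, 0.90)
kb.add(seen, {"model": "svm", "C": 1.0}, 0.80)
new_dataset = meta_features([[0.0, 1.0], [2.0, 1.5], [4.0, 1.0], [6.0, 0.5]], [0, 1, 1, 0])
print(kb.recommend(new_dataset))  # {'model': 'random_forest', 'n_estimators': 100}
# After optimizing on the new dataset, store the outcome so future runs benefit.
kb.add(new_dataset, {"model": "random_forest", "n_estimators": 200}, 0.92)
```

Adding every finished run back into the knowledge base is what lets recommendations improve over time.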

Courses

Thesis topics

Automated Machine Learning

Novel technologies in automated machine learning ease the complexity of algorithm selection and hyperparameter optimization. However, these are usually restricted to supervised learning tasks such as classification and regression, while unsupervised learning remains a largely unexplored problem.

The goal of this thesis is to use meta-learning techniques to develop an interactive and explainable AutoML solution for unsupervised learning.
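Without labels, an AutoML system needs an internal criterion to compare candidate configurations. A minimal sketch, assuming the silhouette coefficient serves as that criterion, treats the number of clusters k as the hyperparameter to tune. It uses 1-D data and plain k-means for brevity and does not include the meta-learning component the thesis targets; all function names are hypothetical.

```python
import statistics
from typing import List, Sequence, Tuple


def kmeans_1d(xs: Sequence[float], k: int, iters: int = 50) -> List[int]:
    """Lloyd's k-means on 1-D data, initialised at evenly spaced quantiles."""
    data = sorted(xs)
    centroids = [data[(2 * i + 1) * len(data) // (2 * k)] for i in range(k)]
    labels = [0] * len(xs)
    for _ in range(iters):
        # Assignment step: nearest centroid for every point.
        labels = [min(range(k), key=lambda j: abs(x - centroids[j])) for x in xs]
        # Update step: move each centroid to the mean of its members.
        new = []
        for j in range(k):
            members = [x for x, lab in zip(xs, labels) if lab == j]
            new.append(statistics.mean(members) if members else centroids[j])
        if new == centroids:
            break
        centroids = new
    return labels


def silhouette(xs: Sequence[float], labels: Sequence[int]) -> float:
    """Mean silhouette coefficient; returns -1.0 when there is only one cluster."""
    clusters = set(labels)
    if len(clusters) < 2:
        return -1.0
    scores = []
    for i, x in enumerate(xs):
        own = [abs(x - z) for j, z in enumerate(xs) if labels[j] == labels[i] and j != i]
        if not own:  # a singleton cluster contributes 0 by convention
            scores.append(0.0)
            continue
        a = statistics.mean(own)  # mean distance within own cluster
        b = min(                  # mean distance to the nearest other cluster
            statistics.mean([abs(x - z) for j, z in enumerate(xs) if labels[j] == c])
            for c in clusters if c != labels[i]
        )
        scores.append((b - a) / max(a, b) if max(a, b) > 0 else 0.0)
    return statistics.mean(scores)


def select_k(xs: Sequence[float], candidate_ks: Sequence[int]) -> Tuple[int, float]:
    """Pick the k whose clustering has the highest silhouette score."""
    return max(((k, silhouette(xs, kmeans_1d(xs, k))) for k in candidate_ks), key=lambda p: p[1])


xs = [1.0, 1.2, 0.9, 5.0, 5.1, 4.8, 10.0, 10.3, 9.7]
print(select_k(xs, [2, 3, 4])[0])  # 3
```

A meta-learning approach would aim to predict a good algorithm and configuration from dataset characteristics before running such a search, rather than trying every candidate.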

Estimating causal effects with machine learning has become a growing research area.

Traditional ML models are designed for prediction, not causal inference, prompting the development of new approaches such as meta-learners. These decompose causal estimation into prediction tasks solvable by standard ML algorithms, which are then combined to estimate causal parameters.

This thesis aims to use meta-learning techniques to recommend suitable meta-learners for estimating heterogeneous treatment effects. Specifically, it will develop a meta-model that predicts the best meta-learner based on task characteristics, using the EconML library as a foundation.
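To make the idea of a meta-learner concrete, here is a minimal T-learner, one of the standard meta-learners (EconML includes implementations of several of them). It fits one outcome model per treatment arm and estimates the conditional average treatment effect as the difference of their predictions. The single-feature linear models, the noise-free synthetic data, and the function names are simplifying assumptions; this is not EconML code.

```python
import statistics
from typing import Callable, Sequence, Tuple


def fit_ols_1d(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least squares for y = a + b * x (assumes at least two distinct x values)."""
    mx, my = statistics.mean(xs), statistics.mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    return my - b * mx, b


def t_learner(x: Sequence[float], t: Sequence[int], y: Sequence[float]) -> Callable[[float], float]:
    """Fit separate outcome models on treated (t=1) and control (t=0) units.

    Returns an estimate of the CATE: tau(x) = mu1(x) - mu0(x).
    """
    treated = [(xi, yi) for xi, ti, yi in zip(x, t, y) if ti == 1]
    control = [(xi, yi) for xi, ti, yi in zip(x, t, y) if ti == 0]
    a1, b1 = fit_ols_1d(*zip(*treated))  # mu1: outcome model for treated units
    a0, b0 = fit_ols_1d(*zip(*control))  # mu0: outcome model for control units
    return lambda xi: (a1 + b1 * xi) - (a0 + b0 * xi)


x = [0.0, 1.0, 2.0, 3.0, 0.0, 1.0, 2.0, 3.0]
t = [0, 0, 0, 0, 1, 1, 1, 1]
# Synthetic outcomes: control y = 1 + 2x; treatment adds a heterogeneous effect of 0.5 + x.
y = [1.0 + 2.0 * xi + (0.5 + xi) * ti for xi, ti in zip(x, t)]
tau = t_learner(x, t, y)
print(tau(2.0))  # 2.5
```

Which meta-learner works best depends on properties such as treatment-group imbalance and effect complexity; characteristics of this kind are what a meta-model could use as input when recommending a meta-learner.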

Despite the growing number of automated machine learning frameworks developed in recent years, none is directly applicable to efficient AutoML in an online setting, where data arrives as a continuous stream. This work focuses on techniques that allocate limited computational resources across learning models while maintaining strong online performance.

The goal of this thesis is to introduce an efficient, explainable, online automated machine learning framework. Automated methods are especially crucial when dealing with streaming data, where manual data investigation can become overwhelming. The framework should empower users to develop their own reliable and trustworthy models.
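As a hedged illustration of allocating a limited compute budget in a streaming setting, the sketch below evaluates several candidate online learners prequentially (each example is first used for testing, then for training) and periodically discards the worse half, in the spirit of successive halving. The candidates (SGD linear regressors that differ only in learning rate), the synthetic stream, and all names are assumptions for illustration; the thesis framework is not specified at this level.

```python
import random
from typing import Dict, Iterable, Iterator, List, Tuple


class OnlineLinearRegressor:
    """y ≈ w * x + b, updated one example at a time by SGD on squared error."""

    def __init__(self, lr: float) -> None:
        self.lr, self.w, self.b = lr, 0.0, 0.0

    def predict(self, x: float) -> float:
        return self.w * x + self.b

    def learn(self, x: float, y: float) -> None:
        err = self.predict(x) - y
        self.w -= self.lr * err * x
        self.b -= self.lr * err


def synthetic_stream(n: int, seed: int = 0) -> Iterator[Tuple[float, float]]:
    """Hypothetical stream: y = 2x + 1 plus Gaussian noise."""
    rng = random.Random(seed)
    for _ in range(n):
        x = rng.random()
        yield x, 2.0 * x + 1.0 + rng.gauss(0.0, 0.1)


def online_select(stream: Iterable[Tuple[float, float]], learning_rates: List[float],
                  round_length: int = 200) -> float:
    """Prequential evaluation with successive-halving-style pruning.

    Every `round_length` examples the worse half of the surviving candidates is
    dropped, so computation concentrates on the most promising models.
    """
    alive: Dict[float, OnlineLinearRegressor] = {lr: OnlineLinearRegressor(lr) for lr in learning_rates}
    errors = {lr: 0.0 for lr in alive}
    for i, (x, y) in enumerate(stream, start=1):
        for lr, model in alive.items():
            errors[lr] += abs(model.predict(x) - y)  # test on the example first...
            model.learn(x, y)                        # ...then train on it
        if i % round_length == 0 and len(alive) > 1:
            keep = sorted(alive, key=errors.__getitem__)[: max(1, len(alive) // 2)]
            alive = {lr: alive[lr] for lr in keep}
            errors = {lr: 0.0 for lr in keep}  # fresh error window for the next round
    return min(alive, key=errors.__getitem__)


best_lr = online_select(synthetic_stream(1000), [0.001, 0.01, 0.1, 0.5])
print(f"selected learning rate: {best_lr}")
```

Halving the candidate pool each round bounds the total work at roughly twice the cost of the first round, which is one simple way to respect a fixed compute budget on an unbounded stream.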

Machine Learning Interpretability

Research on machine learning interpretability can be broadly divided into two main categories: conceptual and technical. In the conceptual domain, a key focus is the trade-off between how interpretable an explanation is and how faithfully it reflects the model's predictions. A related notion is simulatability: the degree to which a human can understand, and mentally simulate, how a model arrives at its predictions.

Enabling machine learning systems to explain their decisions to different target user groups is a challenging task. Interpretability is domain-specific—there is no one-size-fits-all explanation. For instance, in a disease diagnosis model, doctors may understand complex medical terminology, while patients may require simpler explanations using layman’s terms.

The goal of this thesis is to develop a personalized interpretability technique that generates tailored explanations of machine learning models and their decisions, adapted to individual users.

Recently, interpretability has received notable attention, especially since the General Data Protection Regulation (GDPR) became applicable across the European Union in May 2018. The GDPR is widely read as requiring organizations to "explain" any decision made through automated decision-making processes: "a right of explanation for all individuals to obtain meaningful explanations of the logic involved."

The goal of this thesis is to automatically select the best interpretability technique based on the characteristics of the task and several quality measures relevant to the users.

Green Computing

Machine learning applications are constantly evolving and have become an integral part of our daily lives. However, research in this field has largely focused on producing high-precision models without considering energy constraints. This is especially true in deep learning, where the primary goal has been to improve model accuracy without accounting for computational cost or energy consumption.

Given the implications of climate change and the growing international consensus on the need to address it, this trend is no longer sustainable. Energy efficiency must become an essential constraint in the development of machine learning models, with the goal of reducing the carbon footprint of digital technologies.

Deep neural networks transform input data into features through learned weights, while hyperparameters govern how the network is structured and trained. Developing such a model typically involves two phases: training and validation. When defining the model, one must specify hyperparameters such as the number of layers, the size and type of each layer, and the activation functions to be used.

The aim of this thesis is to evaluate model quality against three constraints (accuracy, performance, and energy consumption) and to recommend models that strike a good balance among all three. To achieve this, an empirical study will assess the impact of each hyperparameter on these quality constraints.
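Energy is rarely reported directly; a common first-order estimate multiplies average power draw by runtime and then converts to emissions using the carbon intensity of the electricity grid. The worked example below uses made-up values (a 300 W average draw over a 2-hour training run and an assumed carbon intensity of 0.4 kg CO2e per kWh) purely to show the arithmetic; they are not measurements.

```latex
E = \bar{P}\, t = 300\,\mathrm{W} \times 7200\,\mathrm{s} = 2.16 \times 10^{6}\,\mathrm{J}
  = \frac{2.16 \times 10^{6}\,\mathrm{J}}{3.6 \times 10^{6}\,\mathrm{J/kWh}} = 0.6\,\mathrm{kWh}

\mathrm{CO_2e} = E \cdot I = 0.6\,\mathrm{kWh} \times 0.4\,\mathrm{kg/kWh} = 0.24\,\mathrm{kg}
```

Computing such estimates for each hyperparameter setting, alongside accuracy and runtime, is one way to quantify the trade-offs the study targets.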

Machine learning automation has made model development more accessible and efficient, but it often comes at a significant computational and energy cost. AutoML frameworks, which automate tasks such as model selection and hyperparameter tuning, typically prioritize maximizing predictive accuracy or minimizing runtime. However, the energy consumption associated with these automated processes is rarely considered, despite growing concerns about the environmental impact of large-scale machine learning.

As the demand for sustainable AI grows and the effects of climate change become more pronounced, it is increasingly important to incorporate energy efficiency as a core constraint in AutoML workflows. Reducing the energy footprint of automated machine learning not only aligns with global sustainability goals but also makes AI more accessible in resource-constrained environments.

Hyperparameter optimization is a critical component of AutoML, often requiring extensive search over large parameter spaces. This process can be computationally intensive, leading to high energy consumption. Different optimization strategies—such as grid search, random search, Bayesian optimization, and evolutionary algorithms—vary in their efficiency and environmental impact.

The aim of this thesis is to evaluate AutoML hyperparameter optimization strategies based on three constraints: model accuracy, search efficiency, and energy consumption. The research will empirically assess how different optimization algorithms impact these constraints and will recommend approaches that achieve a balance between model quality and sustainability.
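A minimal sketch of such a comparison, under stated assumptions: a hypothetical objective stands in for a validation loss, and the number of evaluations stands in for energy, since each evaluation would normally be one full training run. Only grid search and random search are shown; the function names and search ranges are illustrative, not part of any specific AutoML framework.

```python
import itertools
import math
import random
from typing import Dict, Tuple

Config = Dict[str, float]


def objective(cfg: Config) -> float:
    """Hypothetical validation loss: minimised at lr = 1e-2; dropout barely matters."""
    return (math.log10(cfg["lr"]) + 2.0) ** 2 + 0.01 * cfg["dropout"]


def grid_search(budget: int) -> Tuple[float, int]:
    """Evaluate a square grid with at most `budget` points; return (best loss, evaluations)."""
    side = math.isqrt(budget)
    if side < 2:
        raise ValueError("budget must be at least 4")
    lrs = [10 ** (-4 + 3 * i / (side - 1)) for i in range(side)]  # log-spaced 1e-4 .. 1e-1
    dropouts = [0.5 * i / (side - 1) for i in range(side)]
    best, evals = math.inf, 0
    for lr, dropout in itertools.product(lrs, dropouts):
        best = min(best, objective({"lr": lr, "dropout": dropout}))
        evals += 1  # each evaluation stands in for one energy-consuming training run
    return best, evals


def random_search(budget: int, seed: int = 0) -> Tuple[float, int]:
    """Sample `budget` configurations uniformly, with lr on a log scale."""
    rng = random.Random(seed)
    best, evals = math.inf, 0
    for _ in range(budget):
        cfg = {"lr": 10 ** rng.uniform(-4, -1), "dropout": rng.uniform(0.0, 0.5)}
        best = min(best, objective(cfg))
        evals += 1
    return best, evals


for name, search in [("grid", grid_search), ("random", random_search)]:
    loss, evals = search(9)
    print(f"{name:>6}: best loss {loss:.4f} after {evals} evaluations")
```

With the same nine evaluations, grid search tries only three distinct learning rates, whereas random search tries nine; this is the usual argument for random search when only a few hyperparameters matter. A real study would replace the evaluation count with measured energy and add strategies such as Bayesian optimization and evolutionary algorithms.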

Contact us

Email: radwa.elshawi@ut.ee
